By Dan Chokoe – Full Stack IT Engineer

Introduction

Hey fellow developers! Dan here. After spending countless hours building web applications and managing infrastructure, I’ve recently dived headfirst into the fascinating world of AI agents. Today, I want to share a hands-on approach to creating a simple but powerful AI agent on Ubuntu that can actually be useful in your daily workflow.

What We’re Building

We’ll create an AI-powered file organizer agent that:

- Monitors a directory for new files

- Analyzes file content using local AI

- Automatically organizes files into appropriate folders

- Provides intelligent naming suggestions

Prerequisites

# System requirements

Ubuntu 20.04+ (I'm running 23.10 Mantic)

Python 3.8+

At least 8GB RAM (16GB recommended)

Step 1: Environment Setup

First, let’s set up our development environment. Run the commands below in your Linux terminal:

# Update system

sudo apt update && sudo apt upgrade -y

# Install Python dependencies

sudo apt install python3-pip python3-venv python3-dev -y

# Create project directory

mkdir ~/ai-file-agent && cd ~/ai-file-agent

# Set up virtual environment

python3 -m venv venv

source venv/bin/activate

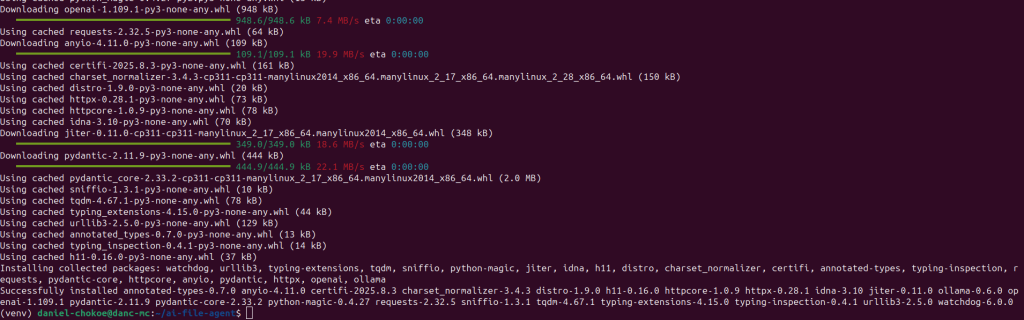

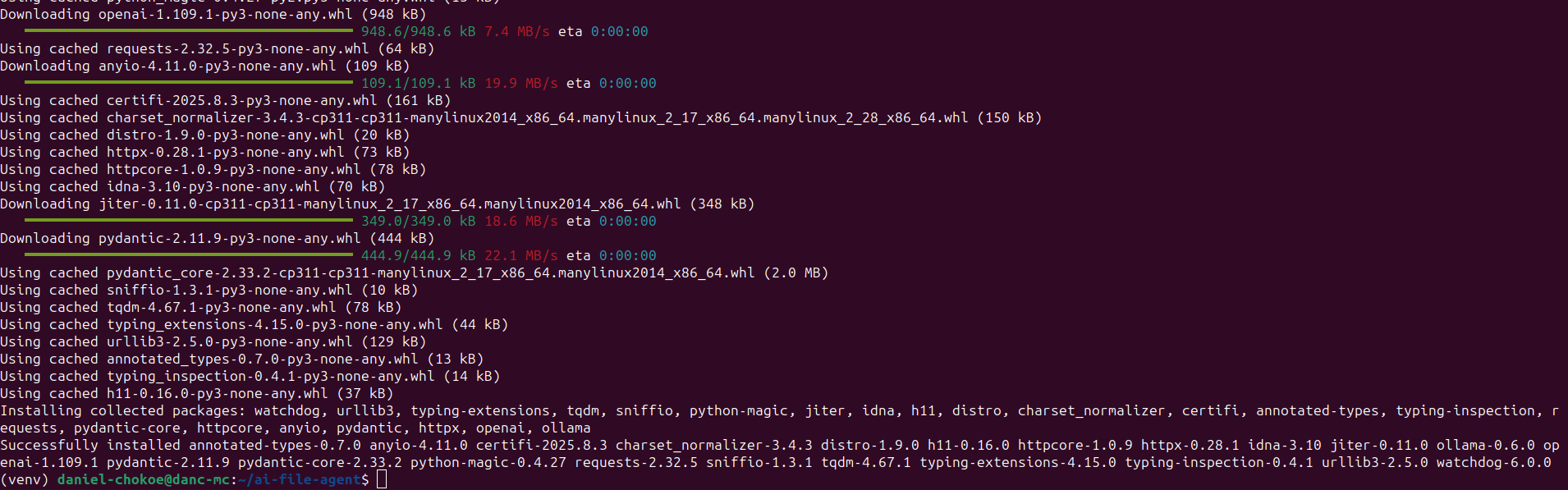

# Install required packages
# (python-magic also needs the system libmagic library: sudo apt install libmagic1)

pip install ollama watchdog python-magic openai requests

Step 2: Install Ollama (Local AI)

As a systems guy, I prefer keeping AI models local when possible:

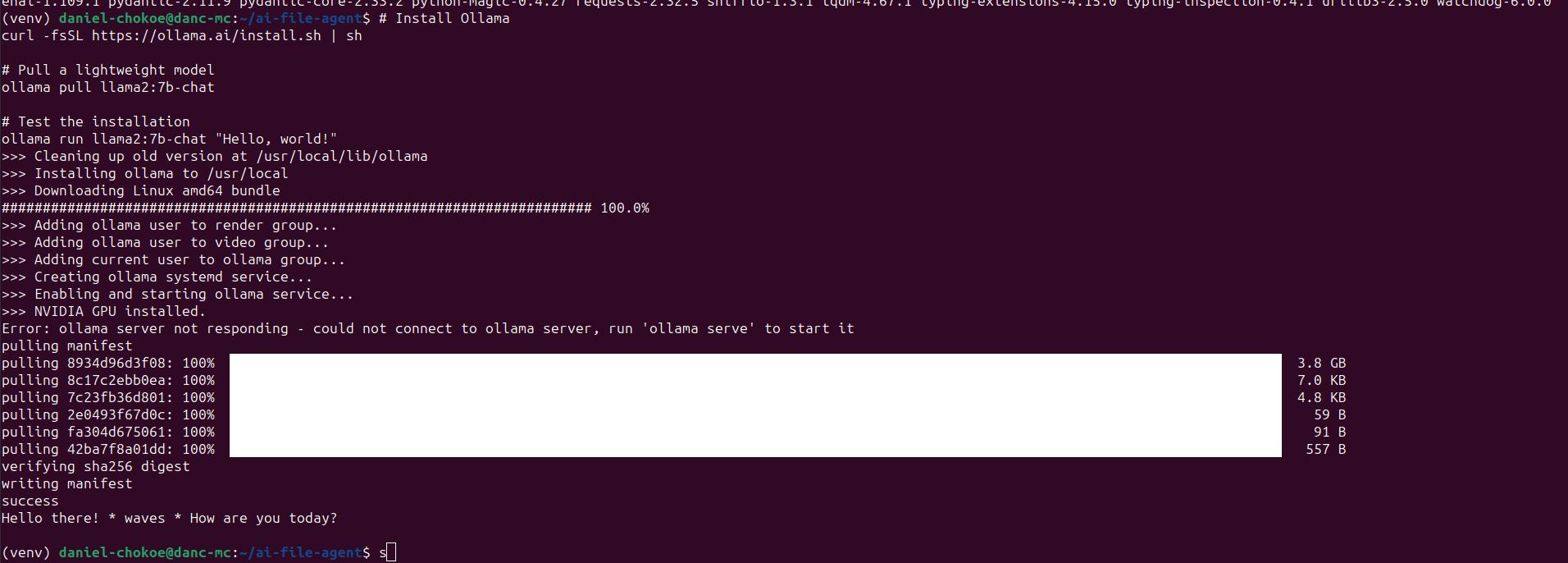

# Install Ollama

curl -fsSL https://ollama.ai/install.sh | sh

# Pull a lightweight model

ollama pull llama2:7b-chat

# Test the installation

ollama run llama2:7b-chat "Hello, world!"

Step 3: The AI Agent Code

Here’s our main agent (file_agent.py):

#!/usr/bin/env python3
"""
AI File Organizer Agent
Author: Dan Chokoe
"""

import json
import os
import shutil
import time
from pathlib import Path

import magic
import requests
from watchdog.events import FileSystemEventHandler
from watchdog.observers import Observer


class AIFileAgent(FileSystemEventHandler):
    def __init__(self, watch_dir, organize_dir):
        self.watch_dir = Path(watch_dir)
        self.organize_dir = Path(organize_dir)
        self.organize_dir.mkdir(parents=True, exist_ok=True)
        self.ollama_url = "http://localhost:11434/api/generate"

    def query_ai(self, prompt):
        """Query local Ollama instance"""
        payload = {
            "model": "llama2:7b-chat",
            "prompt": prompt,
            "stream": False
        }
        try:
            # Timeout so a stalled model cannot block the watcher forever
            response = requests.post(self.ollama_url, json=payload, timeout=120)
            if response.status_code == 200:
                return response.json()['response']
            else:
                return "Unable to process with AI"
        except Exception as e:
            print(f"AI query failed: {e}")
            return "Unable to process with AI"

    def analyze_file(self, file_path):
        """Analyze file and determine appropriate category"""
        file_path = Path(file_path)

        # Get file info
        mime_type = magic.from_file(str(file_path), mime=True)
        file_size = file_path.stat().st_size

        # Read file content (if text and reasonable size)
        content_sample = ""
        if mime_type.startswith('text/') and file_size < 10000:
            try:
                with open(file_path, 'r', encoding='utf-8') as f:
                    content_sample = f.read(500)  # First 500 chars
            except (OSError, UnicodeDecodeError):
                content_sample = "Unable to read content"

        # Create AI prompt
        prompt = f"""
        Analyze this file and suggest an organization category:

        Filename: {file_path.name}
        MIME Type: {mime_type}
        Size: {file_size} bytes
        Content Sample: {content_sample}

        Based on this information, suggest:
        1. A category folder name (one word, lowercase)
        2. An improved filename (keep extension)
        3. A brief reason for the categorization

        Respond in JSON format:
        {{
            "category": "documents",
            "suggested_name": "example_file.txt",
            "reason": "Brief explanation"
        }}
        """

        ai_response = self.query_ai(prompt)

        # Parse AI response (with fallback)
        try:
            # Extract JSON from response
            start = ai_response.find('{')
            end = ai_response.rfind('}') + 1
            if start != -1 and end != 0:
                result = json.loads(ai_response[start:end])
                if isinstance(result, dict):
                    # Path(...).name drops any directory parts the model added
                    category = Path(str(result.get('category', ''))).name
                    name = Path(str(result.get('suggested_name', ''))).name
                    # Only trust replies that contain usable values
                    if category not in ('', '..') and name not in ('', '..'):
                        return {
                            "category": category,
                            "suggested_name": name,
                            "reason": str(result.get('reason', ''))
                        }
        except ValueError:
            # json.JSONDecodeError is a ValueError: fall through to the fallback
            pass

        # Fallback categorization
        if mime_type.startswith('image/'):
            category = "images"
        elif mime_type.startswith('video/'):
            category = "videos"
        elif mime_type.startswith('text/'):
            category = "documents"
        else:
            category = "misc"

        return {
            "category": category,
            "suggested_name": file_path.name,
            "reason": f"Categorized by MIME type: {mime_type}"
        }

    def organize_file(self, file_path):
        """Move and rename file based on AI analysis"""
        try:
            analysis = self.analyze_file(file_path)

            # Create category directory
            category_dir = self.organize_dir / analysis['category']
            category_dir.mkdir(parents=True, exist_ok=True)

            # Determine new file path
            new_path = category_dir / analysis['suggested_name']

            # Handle naming conflicts
            counter = 1
            while new_path.exists():
                name_parts = analysis['suggested_name'].rsplit('.', 1)
                if len(name_parts) == 2:
                    new_name = f"{name_parts[0]}_{counter}.{name_parts[1]}"
                else:
                    new_name = f"{analysis['suggested_name']}_{counter}"
                new_path = category_dir / new_name
                counter += 1

            # Move file (shutil.move also works across filesystems, unlike os.rename)
            shutil.move(str(file_path), str(new_path))
            print(f"✅ Organized: {Path(file_path).name} → {new_path}")
            print(f" Reason: {analysis['reason']}")
        except Exception as e:
            # Covers files that vanish mid-download as well as failed moves
            print(f"❌ Failed to organize {file_path}: {e}")

    def on_created(self, event):
        """Handle new file creation"""
        if not event.is_directory:
            print(f"🔍 New file detected: {event.src_path}")
            # Wait a moment for file to be fully written
            time.sleep(1)
            self.organize_file(event.src_path)


def main():
    # Configuration
    watch_directory = os.path.expanduser("~/Downloads")
    organize_directory = os.path.expanduser("~/OrganizedFiles")

    print("🤖 AI File Organizer Agent Starting...")
    print(f"📁 Watching: {watch_directory}")
    print(f"📁 Organizing to: {organize_directory}")

    # Create agent and observer
    agent = AIFileAgent(watch_directory, organize_directory)
    observer = Observer()
    observer.schedule(agent, watch_directory, recursive=False)

    # Start monitoring
    observer.start()
    print("✅ Agent is running. Press Ctrl+C to stop.")

    try:
        while True:
            time.sleep(1)
    except KeyboardInterrupt:
        observer.stop()
        print("\n🛑 Agent stopped.")

    observer.join()


if __name__ == "__main__":
    main()

Step 4: Demo Time!

Let’s test our agent:

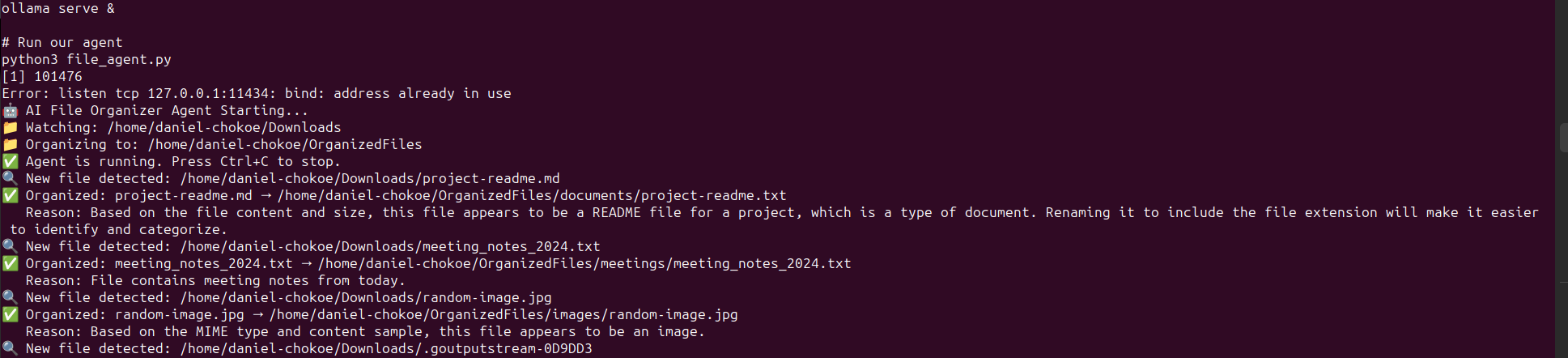

# Make sure Ollama is running
# (the Linux installer usually registers it as a service; skip this line if it is already up)

ollama serve &

# Run our agent

python3 file_agent.py

Now, let’s create some test files. Open another terminal and run the commands below:

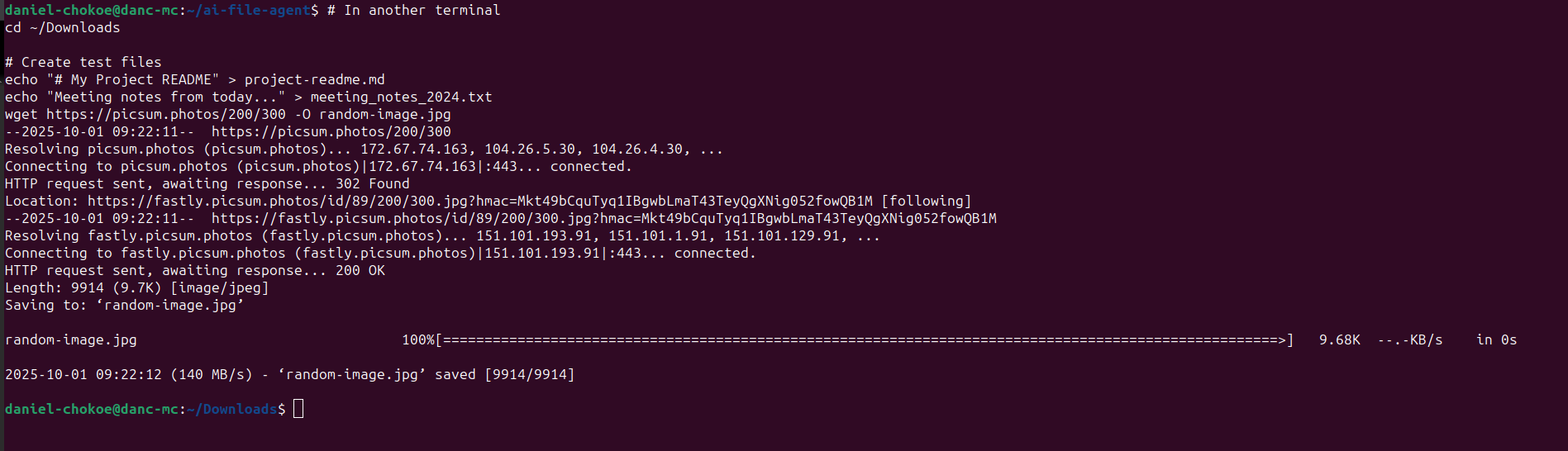

# In another terminal

cd ~/Downloads

# Create test files

echo "# My Project README" > project-readme.md

echo "Meeting notes from today..." > meeting_notes_2024.txt

wget https://picsum.photos/200/300 -O random-image.jpg

What You’ll See

The agent will:

- Detect new files in ~/Downloads

- Analyze each file using the local AI model

- Create organized folders in ~/OrganizedFiles

- Move and potentially rename files intelligently
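One caveat on the detection step: the agent waits a fixed second before touching a new file, which may not be enough for large downloads. Here's a minimal sketch of an alternative, assuming a hypothetical helper `wait_until_stable` (not part of file_agent.py) that polls the file size until it stops changing. The default interval, check count, and timeout are illustrative guesses, not tuned values.

```python
import time
from pathlib import Path


def wait_until_stable(path, interval=0.5, stable_checks=3, timeout=30.0):
    """Return True once the file size has stayed the same for
    `stable_checks` consecutive polls, or False if the file
    disappears or `timeout` seconds pass first.

    Hypothetical helper for illustration only.
    """
    path = Path(path)
    deadline = time.monotonic() + timeout
    last_size = -1
    unchanged = 0
    while time.monotonic() < deadline:
        try:
            size = path.stat().st_size
        except FileNotFoundError:
            # The file vanished, e.g. a browser renamed its temp download
            return False
        if size == last_size:
            unchanged += 1
            if unchanged >= stable_checks:
                return True
        else:
            # Size changed since the last poll: start counting again
            unchanged = 0
            last_size = size
        time.sleep(interval)
    return False
```

You could call it in `on_created` in place of the fixed `time.sleep(1)` and skip the file when it returns False.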

Example output:

🔍 New file detected: /home/dan/Downloads/project-readme.md

✅ Organized: project-readme.md → /home/dan/OrganizedFiles/documents/project_readme.md

Reason: Markdown documentation file containing project information
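The category, new filename, and reason in that output all come from the model's JSON reply, and a 7B model won't always return clean JSON. Below is a rough sketch of a stricter parser, assuming a hypothetical `parse_suggestion` helper (not part of file_agent.py). It uses `json.JSONDecoder.raw_decode` to pick the first valid JSON object out of surrounding chatter, then strips directory parts from the suggested name so a bad reply can't move files outside the target folder.

```python
import json
import re
from pathlib import Path


def parse_suggestion(reply, original_name):
    """Return a cleaned {'category', 'suggested_name', 'reason'} dict,
    or None if `reply` holds no JSON object with a 'category' key.

    Hypothetical helper for illustration only.
    """
    decoder = json.JSONDecoder()
    data = None
    # Try every '{' as a possible start of a JSON object
    for match in re.finditer(r"\{", reply):
        try:
            candidate, _ = decoder.raw_decode(reply, match.start())
        except json.JSONDecodeError:
            continue
        if isinstance(candidate, dict) and "category" in candidate:
            data = candidate
            break
    if data is None:
        return None

    # Keep only lowercase letters, digits, '_' and '-' in the folder name
    category = re.sub(r"[^a-z0-9_-]", "", str(data["category"]).lower()) or "misc"

    # Drop any directory components the model may have included
    original_name = Path(original_name).name
    name = Path(str(data.get("suggested_name") or original_name)).name
    if name in ("", ".", ".."):
        name = original_name

    # Keep the original extension, as the prompt asks
    ext = Path(original_name).suffix
    if Path(name).suffix.lower() != ext.lower():
        name = Path(name).stem + ext

    return {
        "category": category,
        "suggested_name": name,
        "reason": str(data.get("reason", "")),
    }
```

If it returns None, the agent can fall back to plain MIME-type categorization.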

System Integration

To run this as a proper system service:

# Create systemd service

sudo nano /etc/systemd/system/ai-file-agent.service

Add this configuration:

[Unit]

Description=AI File Organizer Agent

After=network.target

[Service]

Type=simple

User=your-username

WorkingDirectory=/home/your-username/ai-file-agent

Environment=PATH=/home/your-username/ai-file-agent/venv/bin

ExecStart=/home/your-username/ai-file-agent/venv/bin/python file_agent.py

Restart=always

[Install]

WantedBy=multi-user.target

# Reload systemd, then enable and start the service

sudo systemctl daemon-reload

sudo systemctl enable ai-file-agent

sudo systemctl start ai-file-agent

Real-World Performance Notes

From my testing on a ThinkPad P1 (i7, 32GB RAM):

- Startup time: ~30 seconds (loading the AI model)

- File processing: 2-5 seconds per file

- Memory usage: ~4GB (mostly the AI model)

- Accuracy: Surprisingly good for basic categorization
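Your numbers will differ depending on hardware and model, so it's worth measuring on your own machine. A small sketch, assuming two hypothetical helpers (`time_calls` and `summarize`, neither of which is in file_agent.py) that time any function over a list of inputs with `time.perf_counter`:

```python
import statistics
import time


def time_calls(func, inputs):
    """Call func(item) for each item and return the per-call durations in seconds."""
    durations = []
    for item in inputs:
        start = time.perf_counter()
        func(item)
        durations.append(time.perf_counter() - start)
    return durations


def summarize(durations):
    """Reduce a list of durations to a few headline numbers."""
    return {
        "count": len(durations),
        "mean_s": statistics.mean(durations),
        "max_s": max(durations),
    }
```

For example, `summarize(time_calls(agent.analyze_file, paths))` would report per-file analysis times for a list of `paths` you choose.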

Next Steps & Improvements

Here are some enhancements I’m working on:

- Multiple model support (different models for different file types)

- Learning from user corrections (feedback loop)

- Web dashboard for monitoring and configuration

- Cloud AI integration as fallback

- Custom rules engine for specific file types
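To give a feel for the last item, here is one way a rules engine could look. This is only a sketch under my own assumptions: the `Rule` class, the `match_rule` function, and the example patterns and categories are all hypothetical. The idea is to check cheap filename rules first and only ask the model when nothing matches.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase
from typing import Optional


@dataclass(frozen=True)
class Rule:
    pattern: str   # lowercase shell-style filename pattern, e.g. "*.iso"
    category: str  # folder name to use when the pattern matches


# Example rules only; order matters, the first match wins
RULES = [
    Rule("invoice*.pdf", "finance"),
    Rule("*.iso", "installers"),
    Rule("*.md", "documents"),
]


def match_rule(filename, rules=RULES) -> Optional[str]:
    """Return the category of the first matching rule, or None."""
    lowered = filename.lower()  # compare case-insensitively
    for rule in rules:
        if fnmatchcase(lowered, rule.pattern):
            return rule.category
    return None
```

A None result would mean "no rule applies, ask the AI", which keeps the model call as the fallback rather than the default.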

Conclusion

Building AI agents doesn’t have to be rocket science. With a local model runner like Ollama and some solid Python scripting, you can create genuinely useful automation tools. This file organizer has already saved me hours of manual sorting, and it’s just the beginning.

The key takeaway? Start simple, iterate fast, and don’t be afraid to get your hands dirty with the infrastructure side. That’s where the real magic happens.

Dan Chokoe is a full-stack IT engineer with 13+ years of experience in web development, DevOps, and now AI integration. You can usually find him knee-deep in Ubuntu terminals or explaining why “it works on my machine.”